Full Lecture 2

Correspondence Theorem

Let [math]P_{X}\left(\cdot\right)[/math] and [math]P_{Y}\left(\cdot\right)[/math] be probability functions, defined on [math]\mathcal{B}\left(\mathbf{R}\right)[/math] and let [math]F_{X}\left(\cdot\right)[/math] and [math]F_{Y}\left(\cdot\right)[/math] be associated cdfs. Then,

[math]P_{X}\left(\cdot\right)=P_{Y}\left(\cdot\right)[/math] iff [math]F_{X}\left(\cdot\right)=F_{Y}\left(\cdot\right).[/math]

The correspondence theorem assures us that we can restrict ourselves to cdfs: a cdf pins down its probability function uniquely, so working with cdfs entails no loss of generality relative to working with probability functions.

CDFs

Function [math]F:\mathbf{R}\rightarrow\left[0,1\right][/math] is a cdf if it satisfies the following conditions:

- [math]\lim_{x\rightarrow-\infty}F\left(x\right)=0.[/math]

- [math]\lim_{x\rightarrow+\infty}F\left(x\right)=1.[/math]

- [math]F\left(\cdot\right)[/math] is non-decreasing.

- [math]F\left(\cdot\right)[/math] is right-continuous (this can be shown by using probability functions of intervals).

Nature of RVs

We now define the natures of random variables:

- Random variable [math]X[/math] is discrete if

[math]\exists f_{X}:\mathbf{R}\rightarrow\left[0,1\right][/math] s.t. [math]F_{X}\left(x\right)=\sum_{t\leq x}f_{X}\left(t\right),x\in\mathbf{R}.[/math]

Function [math]f_{X}[/math] is called the probability mass function (pmf).

- Random variable [math]X[/math] is continuous if

[math]\exists f_{X}:\mathbf{R}\rightarrow\mathbf{R}_{+}[/math] s.t. [math]F_{X}\left(x\right)=\int_{-\infty}^{x}f_{X}\left(t\right)dt,x\in\mathbf{R}.[/math]

Any such [math]f_{X}[/math] is called a probability density function (pdf). Notice that, unlike pmfs, multiple pdfs are consistent with a given cdf: two pdfs yield the same cdf as long as they differ only on a set of (Lebesgue) measure zero.

Another interesting remark is that the probability of any specific value of a continuous variable is zero, i.e., [math]P\left(\left\{ x\right\} \right)=0,\forall x\in\mathbf{R}[/math].

Examples

Coin tossing

[math]F_{X}\left(x\right)=\begin{cases} 0, & x\lt 0\\ \frac{1}{2}, & 0\leq x\lt 1\\ 1, & x\geq1 \end{cases}[/math]

In this case, [math]X[/math] is discrete and [math]F_{X}[/math] is a step function (this always occurs for discrete r.v.s).

The probability mass function is equal to [math]f_{X}\left(x\right)=\begin{cases} \frac{1}{2}, & x\in\left\{ 0,1\right\} \\ 0, & \text{otherwise} \end{cases}[/math].
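As a minimal sketch of the discrete definition [math]F_{X}\left(x\right)=\sum_{t\leq x}f_{X}\left(t\right)[/math], the snippet below rebuilds the coin-tossing cdf from its pmf. The helper name `cdf_from_pmf` and the dictionary representation of the pmf are illustrative assumptions, not part of the lecture.

```python
# Hypothetical helper: build a cdf from a pmf stored as {support point: mass}.
def cdf_from_pmf(pmf):
    def F(x):
        # Add up the mass of every support point t with t <= x.
        return sum(p for t, p in pmf.items() if t <= x)
    return F

# Coin-tossing pmf from the example: mass 1/2 at 0 and at 1.
coin_pmf = {0: 0.5, 1: 0.5}
F = cdf_from_pmf(coin_pmf)

# Evaluate the cdf to the left of, at, and between the jump points.
values = [F(-1), F(0), F(0.5), F(1), F(2)]
print(values)  # [0, 0.5, 0.5, 1.0, 1.0]
```

The jumps at 0 and 1 reproduce the step function above, and the values at the jump points illustrate right-continuity.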

Uniform distribution on (0,1)

[math]F_{X}\left(x\right)=\begin{cases} 0, & x\lt 0\\ x, & 0\leq x\lt 1\\ 1, & x\geq1 \end{cases}[/math] where [math]X[/math] is continuous.

Moreover, both [math]f_{X}\left(x\right)=\begin{cases} 1, & x\in\left[0,1\right]\\ 0, & otherwise \end{cases}[/math] and [math]f_{X}\left(x\right)=\begin{cases} 1, & x\in\left(0,1\right)\\ 0, & otherwise \end{cases}[/math] are consistent pdfs.

Normal distribution

A r.v. [math]X[/math] has a standard normal distribution, [math]X\sim N\left(0,1\right)[/math], if it is continuous with pdf

[math]f_{X}\left(x\right)=\frac{1}{\sqrt{2\pi}}e^{-\frac{x^{2}}{2}},x\in\mathbf{R}[/math]

PMFs and PDFs

Notice that pmfs and, in a sense, pdfs ‘add up’ to one. There is a theorem stating that this property holds in both directions.

For the pmf,

[math]f:\mathbf{R}\rightarrow\left[0,1\right][/math] is the pmf of a discrete r.v. iff [math]\sum_{x\in\mathbf{R}}f\left(x\right)=1.[/math]

And for the pdf,

[math]f:\mathbf{R}\rightarrow\mathbf{R}_{+}[/math] is the pdf of a continuous r.v. iff [math]\int_{-\infty}^{\infty}f\left(x\right)dx=1.[/math]
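The following sketch checks the 'adds up to one' property numerically for the coin-tossing pmf and the standard normal pdf from the examples. The trapezoid helper and the truncation of the real line to [-10, 10] are assumptions made for illustration; the mass outside that interval is negligible for the standard normal.

```python
import math

def normal_pdf(x):
    # Standard normal pdf from the examples section.
    return math.exp(-x * x / 2) / math.sqrt(2 * math.pi)

def trapezoid(f, lo, hi, n=20000):
    # Composite trapezoid rule on [lo, hi] with n equal subintervals.
    h = (hi - lo) / n
    total = 0.5 * (f(lo) + f(hi))
    for i in range(1, n):
        total += f(lo + i * h)
    return total * h

# pmf case: the masses sum to one.
coin_mass = sum({0: 0.5, 1: 0.5}.values())

# pdf case: the integral over (a truncation of) the real line is one.
normal_mass = trapezoid(normal_pdf, -10.0, 10.0)

print(coin_mass, normal_mass)
```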

Remark

It is clear from the examples above that one can specify the distribution of a random variable by specifying its distribution function, or its probability mass/density function. Sometimes, however, it is advantageous to specify the distribution of a random variable through a transformation. For example, suppose [math]Y[/math] is defined as a random variable that follows the distribution of [math]X^{2}[/math], where [math]X\sim N\left(0,1\right)[/math]. This takes us to discussing transformations of random variables. But first, we'll see a very useful result.

Leibniz Rule

This rule can be useful in a series of domains. It states that

[math]\frac{d}{dx}\int_{a\left(x\right)}^{b\left(x\right)}f\left(x,t\right)dt=\int_{a\left(x\right)}^{b\left(x\right)}\frac{\partial}{\partial x}f\left(x,t\right)dt+f\left(x,b\left(x\right)\right)b'\left(x\right)-f\left(x,a\left(x\right)\right)a'\left(x\right).[/math]

This means that the derivative of an integral can be written as the integral of a derivative, plus terms that involve the integrand evaluated at the integration limits and the derivatives of those limits. The case where [math]b\left(x\right)[/math] and [math]a\left(x\right)[/math] are constant follows immediately, since the last two terms vanish.

For this rule to apply, we require [math]f\left(x,t\right)[/math] and its partial derivative w.r.t. [math]x[/math] to be continuous, and that both limits of integration are continuously differentiable. We also note that this rule can be derived from the chain rule of differentiation (see this proof).
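To make the rule concrete, the sketch below compares both sides numerically for one hypothetical choice, [math]f\left(x,t\right)=xt^{2}[/math], [math]a\left(x\right)=x[/math] and [math]b\left(x\right)=x^{2}[/math]. These functions, the Simpson helper, and the finite-difference step are illustrative assumptions. For this choice both sides equal [math]\left(7x^{6}-4x^{3}\right)/3[/math].

```python
def simpson(g, lo, hi, n=200):
    # Composite Simpson rule; n must be even.
    h = (hi - lo) / n
    total = g(lo) + g(hi)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * g(lo + i * h)
    return total * h / 3

# Hypothetical example functions (not from the lecture).
f = lambda x, t: x * t ** 2        # integrand
df_dx = lambda x, t: t ** 2        # partial derivative of f w.r.t. x
a, da = (lambda x: x), (lambda x: 1.0)             # lower limit and its derivative
b, db = (lambda x: x ** 2), (lambda x: 2.0 * x)    # upper limit and its derivative

def area(x):
    # Integral of f(x, t) dt from a(x) to b(x).
    return simpson(lambda t: f(x, t), a(x), b(x))

x, h = 1.5, 1e-5

# Left-hand side: central finite difference of the integral.
lhs = (area(x + h) - area(x - h)) / (2 * h)

# Right-hand side: integral of the partial derivative plus boundary terms.
rhs = (simpson(lambda t: df_dx(x, t), a(x), b(x))
       + f(x, b(x)) * db(x) - f(x, a(x)) * da(x))

exact = (7 * x ** 6 - 4 * x ** 3) / 3
print(lhs, rhs, exact)
```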

Improper Integrals

When the integral is improper (i.e., at least one of the limits is infinite), the Leibniz rule may fail despite all relevant functions being otherwise well-behaved.

At the crux of this problem is whether [math]\lim_{h\rightarrow0}\int_{0}^{\infty}\frac{f\left(x+h,t\right)-f\left(x,t\right)}{h}dt=\int_{0}^{\infty}\lim_{h\rightarrow0}\frac{f\left(x+h,t\right)-f\left(x,t\right)}{h}dt[/math].

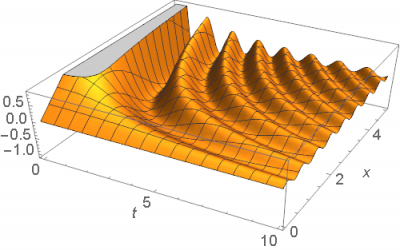

Consider the example of the following function:

[math]f\left(x,t\right)=\frac{\sin\left(tx\right)}{t},[/math]

plotted below:

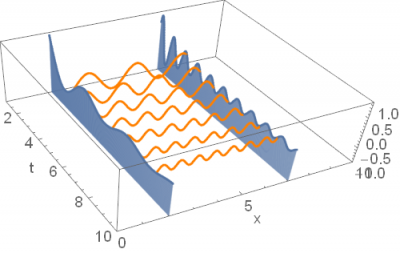

First, notice that by calculating the expression of interest directly, [math]\frac{d}{dx}\int_{0}^{\infty}f\left(x,t\right)dt[/math], we learn how the area under [math]f\left(x,t\right)[/math] along the [math]t[/math] axis changes when [math]x[/math] is moved slightly. In order to see this, consider the following plot of [math]\frac{\sin\left(tx\right)}{t}[/math], shown now only for specific values of [math]t[/math] and [math]x[/math].

For now, we will focus on the blue sections, along which [math]x[/math] is fixed. We can think of [math]\frac{d}{dx}\int_{0}^{\infty}f\left(x,t\right)dt[/math] as first calculating the area under each of the blue curves, and then calculating how those areas change as a function of [math]x[/math]. By calculating this expression directly, we obtain

[math]\frac{d}{dx}\int_{0}^{\infty}f\left(x,t\right)dt=\frac{d}{dx}\frac{\pi}{2}\text{sign}\left(x\right),[/math]

which equals zero at [math]x\neq0[/math] and is infinite at [math]x=0[/math]. This is the correct answer: As [math]x[/math] changes slightly, the area under [math]f\left(x,t\right)[/math] remains constant, except at [math]x=0[/math], where it changes at an infinite rate.

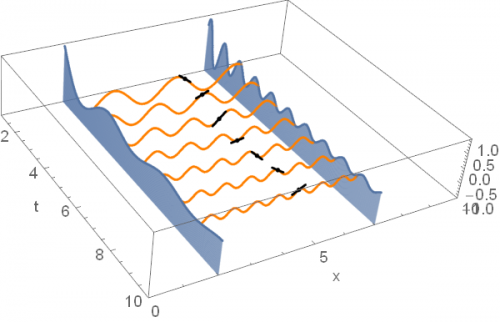

Now, consider the alternative calculation, [math]\int_{0}^{\infty}\frac{\partial}{\partial x}f\left(x,t\right)dt[/math]. In this case, we first calculate how much the function changes with small increments in [math]x[/math] for generic values of [math]t[/math]. For example, we could be calculating the vertical differences in the endpoints of the orange lines of the plot above. Then, we add up these differences along [math]t[/math], by applying the integral.

The integrand of this expression is given by: [math]\frac{\partial}{\partial x}f\left(x,t\right)=\frac{\partial}{\partial x}\frac{\sin\left(tx\right)}{t}=\text{cos}\left(tx\right)[/math]. We have learned that the slope of [math]f[/math] along the [math]x[/math]-axis is periodic. Function [math]\text{cos}\left(tx\right)[/math] represents the information about the slopes, which we represent below through small line segments, along [math]x=5[/math]:

A property of the cosine (and other elementary trigonometric functions) is that, for a given [math]x[/math], the area 'underneath' is also periodic and does not vanish as [math]t[/math] approaches infinity. This is a problem: When we take an integral from zero to infinity, the area under [math]\text{cos}\left(tx\right)[/math] does not converge.

Intuitively, the integral adds up the slopes in the [math]x[/math] direction, which keep rotating forever. If these slopes stabilized at some point (for example, if they all approached zero when [math]t[/math] was large), then the integral would also converge. However, because the slopes keep rotating as [math]t[/math] changes, the integral does not converge and the Leibniz rule fails. This issue can only arise when at least one of the limits of integration is infinity.
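The non-convergence can be seen from the partial integrals. For [math]x\neq0[/math], [math]\int_{0}^{T}\cos\left(tx\right)dt=\frac{\sin\left(Tx\right)}{x}[/math], which keeps oscillating between [math]-1/x[/math] and [math]1/x[/math] as [math]T[/math] grows. The sketch below evaluates this closed form at [math]x=5[/math] for a few large values of [math]T[/math]; the particular checkpoints are an illustrative choice.

```python
import math

x = 5.0

def partial_integral(T, x):
    # Closed form of the integral of cos(t * x) dt from 0 to T, for x != 0.
    return math.sin(T * x) / x

# Upper limits chosen so that T * x = (k + 1/2) * pi, where sin equals +1 or -1.
checkpoints = [(k + 0.5) * math.pi / x for k in range(1000, 1006)]
values = [partial_integral(T, x) for T in checkpoints]

# The partial integrals alternate between roughly +0.2 and -0.2
# instead of settling down to a limit.
for T, v in zip(checkpoints, values):
    print(round(T, 3), round(v, 6))
```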

When this issue does not apply (i.e., the integral of the partial derivative converges), then the result

[math]\frac{d}{dx}\int_{a\left(x\right)}^{b\left(x\right)}f\left(x,t\right)dt=\int_{a\left(x\right)}^{b\left(x\right)}\frac{\partial}{\partial x}f\left(x,t\right)dt+f\left(x,b\left(x\right)\right)b'\left(x\right)-f\left(x,a\left(x\right)\right)a'\left(x\right)[/math]

is valid. If [math]a\left(x\right)=\infty[/math] or [math]b\left(x\right)=\infty[/math], we apply the convention [math]\frac{d\infty}{dx}=0[/math], so that the corresponding boundary term drops out of the expression.
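As a contrast with [math]\frac{\sin\left(tx\right)}{t}[/math], the sketch below uses the hypothetical integrand [math]f\left(x,t\right)=e^{-xt}[/math] with [math]x\gt 0[/math], for which [math]\int_{0}^{\infty}e^{-xt}dt=\frac{1}{x}[/math] and the integral of the partial derivative converges to [math]-\frac{1}{x^{2}}[/math]. The example function, the truncation of the upper limit at [math]T=60[/math], and the numerical helpers are assumptions made for illustration.

```python
import math

def simpson(g, lo, hi, n=20000):
    # Composite Simpson rule; n must be even.
    h = (hi - lo) / n
    total = g(lo) + g(hi)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * g(lo + i * h)
    return total * h / 3

f = lambda x, t: math.exp(-x * t)            # hypothetical integrand
df_dx = lambda x, t: -t * math.exp(-x * t)   # its partial derivative w.r.t. x

T = 60.0          # stand-in for the infinite upper limit (assumption)
x, h = 2.0, 1e-4

def area(x):
    return simpson(lambda t: f(x, t), 0.0, T)

# Derivative of the integral, by central finite differences.
lhs = (area(x + h) - area(x - h)) / (2 * h)

# Integral of the derivative; the boundary terms vanish here because
# the lower limit is constant and the upper limit is treated as infinite.
rhs = simpson(lambda t: df_dx(x, t), 0.0, T)

print(lhs, rhs, -1 / x ** 2)
```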

- For an alternative intuition, see Lecture 2. B) Leibniz Rule II.

Transformations of random variables

Suppose [math]Y=g\left(X\right)[/math], where [math]g:\mathbf{R}\rightarrow\mathbf{R}[/math] is a function and [math]X[/math] is an r.v. with cdf [math]F_{X}[/math].

Clearly, [math]Y[/math] is also a random variable. Its induced probability function is equal to [math]P_{Y}\left(\cdot\right)=P_{X}\circ g^{-1}[/math]. When [math]X[/math] is discrete, it is usually simple to obtain the distribution of [math]Y[/math]. This becomes more complicated in the continuous case.

We consider the cases of strictly monotone transformations here. When transformations are not strictly monotone, the same procedure applies in a piecewise fashion (i.e., one needs to apply it repeatedly to different monotone sections of the transformation).

Affine Transformations: CDF

- Suppose [math]Y=g\left(X\right)=aX+b,a\gt 0,b\in\mathbf{R}[/math].

In order to deduce [math]F_{Y}[/math], we use the probability functions of [math]X[/math] and [math]Y[/math]. Notice first that [math]F_{Y}\left(y\right)=P\left(Y\leq y\right)[/math]. This probability statement can be used to relate the cdf of [math]Y[/math] to the cdf of [math]X[/math]:

[math]P\left(Y\leq y\right)=P\left(aX+b\leq y\right)=P\left(X\leq\frac{y-b}{a}\right)=F_{X}\left(\frac{y-b}{a}\right).[/math]

This is a very useful result: we have related the cdf of the transformed r.v. [math]Y[/math] to the cdf of the original variable [math]X[/math]. We have learned that the cdf of [math]Y[/math] is given by the cdf of [math]X[/math], evaluated at a transformed value of the function's argument.

- Now, suppose [math]Y=aX+b[/math] where [math]a\lt 0[/math]. In this case, we obtain

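[math]F_{Y}\left(y\right)=P\left(Y\leq y\right)=P\left(aX+b\leq y\right)=P\left(X\geq\frac{y-b}{a}\right)=1-P\left(X\lt \frac{y-b}{a}\right)=1-F_{X}\left(\frac{y-b}{a}\right)[/math], where the last equality uses the fact that [math]P\left(X=\frac{y-b}{a}\right)=0[/math] when [math]X[/math] is continuous.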

Affine Transformations: PDF

- Let [math]a\gt 0[/math] and [math]Y=aX+b[/math].

We know [math]F_{Y}\left(y\right)=F_{X}\left(\frac{y-b}{a}\right)[/math], and that [math]f_{Y}\left(y\right)=\frac{d}{dy}F_{Y}\left(y\right).[/math]

By applying Leibniz rule, we obtain

[math]f_{Y}\left(y\right)=\frac{d}{dy}F_{X}\left(\frac{y-b}{a}\right)=f_{X}\left(\frac{y-b}{a}\right)\frac{d}{dy}\frac{y-b}{a}=f_{X}\left(\frac{y-b}{a}\right)\frac{1}{a}[/math].

- If, on the other hand, [math]a\lt 0[/math], we would have [math]F_{Y}\left(y\right)=1-F_{X}\left(\frac{y-b}{a}\right)[/math], and applying Leibniz rule yields [math]f_{Y}\left(y\right)=-f_{X}\left(\frac{y-b}{a}\right)\frac{1}{a}.[/math]

We can write down both of these cases simultaneously, as

[math]f_{Y}\left(y\right)=f_{X}\left(\frac{y-b}{a}\right)\left|\frac{1}{a}\right|[/math], when [math]Y=aX+b[/math] and [math]a\neq 0[/math].
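A quick numerical check of this formula, assuming [math]X\sim N\left(0,1\right)[/math] and the illustrative values [math]a=-2[/math], [math]b=3[/math]: the sketch differentiates the cdf of [math]Y[/math] by finite differences and compares the result with [math]f_{X}\left(\frac{y-b}{a}\right)\left|\frac{1}{a}\right|[/math]. The function names and the evaluation point are assumptions.

```python
import math

def phi(x):
    # Standard normal pdf.
    return math.exp(-x * x / 2) / math.sqrt(2 * math.pi)

def Phi(x):
    # Standard normal cdf, written with the error function.
    return 0.5 * (1 + math.erf(x / math.sqrt(2)))

def cdf_Y(y, a, b):
    # F_Y(y) = F_X((y-b)/a) if a > 0, and 1 - F_X((y-b)/a) if a < 0.
    z = (y - b) / a
    return Phi(z) if a > 0 else 1 - Phi(z)

def pdf_Y(y, a, b):
    # Combined formula covering both signs of a.
    return phi((y - b) / a) * abs(1 / a)

a, b, y, h = -2.0, 3.0, 1.7, 1e-5

# Finite-difference derivative of the cdf versus the closed-form pdf.
numeric = (cdf_Y(y + h, a, b) - cdf_Y(y - h, a, b)) / (2 * h)
formula = pdf_Y(y, a, b)
print(numeric, formula)
```

With these values [math]Y[/math] is normal with mean 3 and variance 4, so `formula` should also match that density at [math]y=1.7[/math].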

In general, as long as the transformation [math]Y=g\left(X\right)[/math] is strictly monotonic and [math]g^{-1}[/math] is differentiable, then

[math]f_{Y}\left(y\right)=f_{X}\left(g^{-1}\left(y\right)\right)\left|\frac{d}{dy}g^{-1}\left(y\right)\right|.[/math]

When it is not, one can apply the formula separately on each region where [math]g[/math] is strictly monotonic and add up the resulting terms.

Also, notice that the role of [math]g^{-1}\left(y\right)[/math] is to ensure that the result is expressed as a function of the argument of interest, [math]y[/math], rather than [math]x[/math].
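The piecewise procedure can be sketched with the earlier example [math]Y=X^{2}[/math], [math]X\sim N\left(0,1\right)[/math]. The map [math]g\left(x\right)=x^{2}[/math] is strictly monotonic on [math]x\lt 0[/math] and on [math]x\gt 0[/math], with inverses [math]\mp\sqrt{y}[/math] and [math]\left|\frac{d}{dy}g^{-1}\left(y\right)\right|=\frac{1}{2\sqrt{y}}[/math] on both branches. The code below adds the two contributions and compares the result with a finite-difference derivative of [math]F_{Y}\left(y\right)=P\left(-\sqrt{y}\leq X\leq\sqrt{y}\right)[/math]. Function names and the evaluation point are illustrative assumptions.

```python
import math

def phi(x):
    # Standard normal pdf.
    return math.exp(-x * x / 2) / math.sqrt(2 * math.pi)

def Phi(x):
    # Standard normal cdf, written with the error function.
    return 0.5 * (1 + math.erf(x / math.sqrt(2)))

def pdf_Y(y):
    # Sum of the monotone-branch formula over x = -sqrt(y) and x = +sqrt(y).
    if y <= 0:
        return 0.0
    r = math.sqrt(y)
    jac = 1 / (2 * r)          # |d/dy g^{-1}(y)| on either branch
    return phi(r) * jac + phi(-r) * jac

def cdf_Y(y):
    # P(X^2 <= y) = P(-sqrt(y) <= X <= sqrt(y)) = 2 * Phi(sqrt(y)) - 1.
    if y <= 0:
        return 0.0
    return 2 * Phi(math.sqrt(y)) - 1

y, h = 1.3, 1e-5
numeric = (cdf_Y(y + h) - cdf_Y(y - h)) / (2 * h)
formula = pdf_Y(y)
print(numeric, formula)
```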

There also exists a formula for transformations of multiple random variables. In this case, rather than multiplying the pdf by a single derivative, one uses the absolute value of the determinant of the Jacobian matrix of the transformations.