Full Lecture 18


Multicollinearity

Consider the case where [math]X[/math] is given by:

[math]X=\overset{\begin{array}{ccc} \beta_{0} & \beta_{1} & \beta_{2}\end{array}}{\left[\begin{array}{ccc} 1 & 1 & 0\\ 1 & 0 & 1\\ 1 & 1 & 0\\ 1 & 0 & 1\\ 1 & 1 & 0 \end{array}\right]}[/math]

Notice that [math]\beta_{0}=0;\beta_{1}=1;\beta_{2}=1[/math] predict the same value of [math]y_{i}[/math] as [math]\beta_{0}=1;\beta_{1}=0;\beta_{2}=0[/math]. In this case, there is no unique solution for [math]\widehat{\beta}_{OLS}=\text{argmin}_{\beta}\left(y-X\beta\right)^{'}\left(y-X\beta\right).[/math]
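The non-uniqueness can be checked numerically. The following is a minimal sketch using NumPy (an assumption; the lecture does not prescribe any software), with the matrix [math]X[/math] and the two parameter vectors taken from the example above.

```python
import numpy as np

# Design matrix from the example: a constant plus two mutually exclusive dummies.
X = np.array([
    [1, 1, 0],
    [1, 0, 1],
    [1, 1, 0],
    [1, 0, 1],
    [1, 1, 0],
], dtype=float)

beta_a = np.array([0.0, 1.0, 1.0])
beta_b = np.array([1.0, 0.0, 0.0])

# Both parameter vectors produce the same fitted value for every observation.
same_predictions = np.allclose(X @ beta_a, X @ beta_b)

# X has three columns but only rank 2 (the constant equals x1 + x2),
# so X'X is singular and the normal equations have no unique solution.
rank_X = np.linalg.matrix_rank(X)

print(same_predictions, rank_X)
```

Because the first column is the sum of the other two, any least-squares criterion value attained by one parameter vector is also attained by infinitely many others.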

Issues may also arise when two variables are almost collinear. In this case, it can become challenging to identify the parameters of two highly correlated variables separately. Moreover, perturbing the data may move significance from one parameter to the other, and often only one of the two parameters will be significant (although removing the significant regressor will typically make the parameter of the remaining regressor significant).
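To see why near-collinearity makes individual coefficients hard to pin down, one can compare the relevant diagonal entry of [math]\left(X^{'}X\right)^{-1}[/math], which scales the variance of the OLS coefficient under homoskedasticity. The sketch below uses simulated data; the sample size, the noise scale `0.01`, and the helper name `coef_variance_factor` are all hypothetical choices made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
N = 200
x1 = rng.normal(size=N)
z = rng.normal(size=N)  # an independent draw, unrelated to x1


def coef_variance_factor(x2):
    """Diagonal entry of (X'X)^{-1} for the coefficient on x1.

    Under homoskedasticity, the variance of that coefficient is
    sigma^2 times this factor.
    """
    X = np.column_stack([np.ones(N), x1, x2])
    return np.linalg.inv(X.T @ X)[1, 1]


# Second regressor unrelated to x1.
factor_unrelated = coef_variance_factor(z)

# Second regressor almost identical to x1.
factor_collinear = coef_variance_factor(x1 + 0.01 * z)

print(factor_unrelated, factor_collinear)
```

The factor for the nearly collinear design is several orders of magnitude larger, which is why small perturbations of the data can move significance from one coefficient to the other.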

When one cares only about prediction, separating the two coefficients may not be crucial: in this case the researcher is interested in the joint effect, and it matters little that the effects of the individual regressors are hard to separate. On the other hand, if one cares about the specific effects, then there is no easy way around the problem.

Often, multicollinearity arises because of the so-called “dummy trap”. In the example above, [math]x_{1}[/math] could represent young age and [math]x_{2}[/math] could represent old age. By adding all possible cases as well as a constant, we have effectively introduced “too many cases” and induced multicollinearity.
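A common way out of the dummy trap is to drop one of the categories, so that the constant captures the omitted group. The sketch below illustrates this with NumPy; the outcome vector `y` is made up for illustration and is not part of the lecture.

```python
import numpy as np

# Constant, x1 (young), x2 (old): the three columns are linearly dependent.
X_full = np.array([
    [1, 1, 0],
    [1, 0, 1],
    [1, 1, 0],
    [1, 0, 1],
    [1, 1, 0],
], dtype=float)

# Hypothetical outcomes, chosen only for illustration.
y = np.array([2.0, 5.0, 3.0, 4.0, 1.0])

# Drop the "old" dummy: the constant now measures the mean of the old group,
# and the coefficient on x1 measures the young-minus-old difference.
X_drop = X_full[:, :2]
rank_drop = np.linalg.matrix_rank(X_drop)

# With full column rank, the normal equations have a unique solution.
beta_hat = np.linalg.solve(X_drop.T @ X_drop, X_drop.T @ y)

print(rank_drop, beta_hat)
```

Here the estimated constant equals the sample mean of [math]y[/math] in the omitted group, and the slope equals the difference in group means.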

If multicollinearity is not due to the dummy trap, one can use specific regression methods (e.g., ridge regression, principal components regression) that shrink the coefficients or reduce the dimension of the regressors according to specific criteria. These techniques are especially useful in regressions with many variables, sometimes even when [math]K\gt N[/math]. However, they do not solve the issue of separately identifying the effects of each variable. Ideally, collecting more or better data will solve the problem.
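As an illustration of one such method, the sketch below applies the textbook ridge formula [math]\left(X^{'}X+\lambda I\right)^{-1}X^{'}y[/math] to the collinear design from the start of the lecture. The formula is not derived in these notes, and the outcome vector `y`, the penalty `lam`, and the choice to penalize the constant as well are assumptions made only to keep the example short.

```python
import numpy as np

X = np.array([
    [1, 1, 0],
    [1, 0, 1],
    [1, 1, 0],
    [1, 0, 1],
    [1, 1, 0],
], dtype=float)

# Hypothetical outcomes and penalty, chosen only for illustration.
y = np.array([2.0, 5.0, 3.0, 4.0, 1.0])
lam = 1.0

XtX = X.T @ X
rank_ols = np.linalg.matrix_rank(XtX)      # X'X is singular here

# Adding lam * I makes the matrix invertible for any lam > 0.
ridge_matrix = XtX + lam * np.eye(X.shape[1])
rank_ridge = np.linalg.matrix_rank(ridge_matrix)

# Unique ridge solution, even though OLS has none.
beta_ridge = np.linalg.solve(ridge_matrix, X.T @ y)

print(rank_ols, rank_ridge, beta_ridge)
```

The ridge solution is unique, but it is pinned down by the penalty rather than by the data, which is consistent with the point that these methods do not separately identify the effect of each variable.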

Partitioned Regression

Partitioned regression is a method to understand how some parameters in OLS depend on others. Consider the decomposition of the linear regression equation

[math]\begin{aligned} & y=X\beta+\varepsilon\\ \Leftrightarrow & y=\left[\begin{array}{cc} X_{1} & X_{2}\end{array}\right]\left[\begin{array}{c} \beta_{1}\\ \beta_{2} \end{array}\right]+\varepsilon\end{aligned}[/math]

where we are effectively partitioning the parameter vector [math]\beta[/math] into two sub-vectors [math]\beta_{1}[/math] and [math]\beta_{2}[/math].

What is [math]\widehat{\beta}_{2}[/math]?

Starting with the OLS normal equation,

[math]\begin{aligned} & X^{'}X\beta=X^{'}y\\ \Leftrightarrow & \left[\begin{array}{c} X_{1}^{'}\\ X_{2}^{'} \end{array}\right]\left[\begin{array}{cc} X_{1} & X_{2}\end{array}\right]\left[\begin{array}{c} \beta_{1}\\ \beta_{2} \end{array}\right]=\left[\begin{array}{c} X_{1}^{'}\\ X_{2}^{'} \end{array}\right]y\\ \Leftrightarrow & \left[\begin{array}{cc} X_{1}^{'}X_{1} & X_{1}^{'}X_{2}\\ X_{2}^{'}X_{1} & X_{2}^{'}X_{2} \end{array}\right]\left[\begin{array}{c} \beta_{1}\\ \beta_{2} \end{array}\right]=\left[\begin{array}{c} X_{1}^{'}\\ X_{2}^{'} \end{array}\right]y\\ \Leftrightarrow & \left\{ \begin{array}{c} X_{1}^{'}X_{1}\beta_{1}+X_{1}^{'}X_{2}\beta_{2}=X_{1}^{'}y\\ X_{2}^{'}X_{1}\beta_{1}+X_{2}^{'}X_{2}\beta_{2}=X_{2}^{'}y \end{array}\right.\end{aligned}[/math]

Define the equations immediately above as (1) and (2). Now, premultiply the first equation by [math]X_{2}^{'}X_{1}\left(X_{1}^{'}X_{1}\right)^{-1}[/math], to obtain

[math]\begin{aligned} & X_{2}^{'}X_{1}\left(X_{1}^{'}X_{1}\right)^{-1}X_{1}^{'}X_{1}\beta_{1}+X_{2}^{'}X_{1}\left(X_{1}^{'}X_{1}\right)^{-1}X_{1}^{'}X_{2}\beta_{2}=X_{2}^{'}X_{1}\left(X_{1}^{'}X_{1}\right)^{-1}X_{1}^{'}y\\ \Leftrightarrow & X_{2}^{'}X_{1}\beta_{1}+X_{2}^{'}X_{1}\left(X_{1}^{'}X_{1}\right)^{-1}X_{1}^{'}X_{2}\beta_{2}=X_{2}^{'}X_{1}\left(X_{1}^{'}X_{1}\right)^{-1}X_{1}^{'}y\end{aligned}[/math]

Subtracting the last equation from equation (2) yields:

[math]\left(X_{2}^{'}X_{2}-X_{2}^{'}X_{1}\left(X_{1}^{'}X_{1}\right)^{-1}X_{1}^{'}X_{2}\right)\beta_{2}=\left[X_{2}^{'}-X_{2}^{'}X_{1}\left(X_{1}^{'}X_{1}\right)^{-1}X_{1}^{'}\right]y[/math]

Now, let [math]P_{1}=X_{1}\left(X_{1}^{'}X_{1}\right)^{-1}X_{1}^{'}[/math], to get

[math]\begin{aligned} & X_{2}^{'}\left(I-P_{1}\right)X_{2}\beta_{2}=X_{2}^{'}\left(I-P_{1}\right)y\\ \Leftrightarrow & \widehat{\beta_{2}}=\left[X_{2}^{'}\left(I-P_{1}\right)X_{2}\right]^{-1}X_{2}^{'}\left(I-P_{1}\right)y\end{aligned}[/math]

In order to interpret this equation, we need to understand the meaning of matrix [math]P_{1}[/math]. In linear algebra, this matrix is called a projection matrix.

Projections

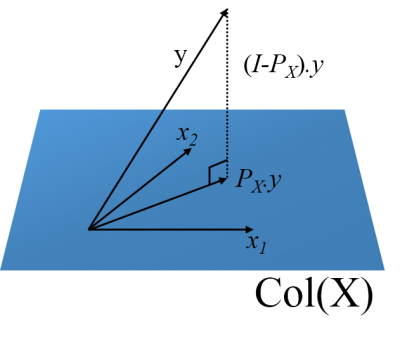

Let [math]P_{X}=X\left(X^{'}X\right)^{-1}X^{'}[/math]. When multiplied by a vector, matrix [math]P_{X}[/math] yields another vector that can be written as a weighted sum of the columns of [math]X[/math]. The figure above represents the case where [math]N=3[/math] and [math]K=2[/math].

When multiplied by vector [math]y[/math], matrix [math]P_{X}[/math] yields vector [math]P_{X}y[/math], which lives in the column space of [math]X[/math]. This column space, [math]Col\left(X\right)[/math], is the space spanned by the columns of [math]X[/math]: any vector in [math]Col\left(X\right)[/math] can be obtained as a weighted sum of the columns of [math]X[/math]. In fact, notice that [math]P_{X}y=X\left(X^{'}X\right)^{-1}X^{'}y=X\widehat{\beta}_{OLS}[/math], i.e., it is the OLS prediction of [math]y[/math].

As for matrix [math]I-P_{X}[/math] , this matrix produces a vector that is orthogonal to the column space of [math]X[/math]. In fact, it is given by the vertical dashed vector in the figure above. Notice that

[math]\left(I-P_{X}\right)y=y-\widehat{y}=\widehat{\varepsilon},[/math]

i.e., this matrix produces the vector of estimated residuals, which is orthogonal (in the geometric sense) to the column space of [math]X[/math].

Projection matrices such as [math]P_{X}[/math] and [math]I-P_{X}[/math] are symmetric and idempotent, the latter meaning that repeated self-multiplication always yields the projection matrix itself, e.g., [math]P_{X}P_{X}=P_{X}[/math].
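These properties are easy to verify numerically. The sketch below uses NumPy with simulated data for the case [math]N=3[/math], [math]K=2[/math]; the random seed and variable names are arbitrary choices made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
N, K = 3, 2
X = rng.normal(size=(N, K))
y = rng.normal(size=N)

# Projection onto Col(X) and onto its orthogonal complement.
P = X @ np.linalg.inv(X.T @ X) @ X.T
M = np.eye(N) - P

y_hat = P @ y   # OLS fitted values
resid = M @ y   # OLS residuals

beta_ols = np.linalg.solve(X.T @ X, X.T @ y)

is_symmetric = np.allclose(P, P.T)
is_idempotent = np.allclose(P @ P, P)
fits_match = np.allclose(y_hat, X @ beta_ols)
# Residuals are orthogonal to every column of X.
resid_orthogonal = np.allclose(X.T @ resid, 0.0)

print(is_symmetric, is_idempotent, fits_match, resid_orthogonal)
```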

Partitioned Regression (cont.)

With the knowledge of projection matrices, equation

[math]\widehat{\beta_{2}}=\left[X_{2}^{'}\left(I-P_{1}\right)X_{2}\right]^{-1}X_{2}^{'}\left(I-P_{1}\right)y[/math]

can be rewritten as

[math]\widehat{\beta_{2}}=\left[X_{2}^{*'}X_{2}^{*}\right]^{-1}X_{2}^{*'}y^{*}[/math]

where

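[math]X_{2}^{*}=\left(I-P_{1}\right)X_{2}[/math] and [math]y^{*}=\left(I-P_{1}\right)y[/math] (notice that we are using the symmetry and idempotence of [math]I-P_{1}[/math], which imply [math]\left(I-P_{1}\right)^{'}\left(I-P_{1}\right)=I-P_{1}[/math]).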

Notice that [math]y^{*}=\left(I-P_{1}\right)y[/math] are the residuals from regressing [math]y[/math] on [math]X_{1}[/math], and [math]X_{2}^{*}=\left(I-P_{1}\right)X_{2}[/math] are the residuals from regressing each of the variables in [math]X_{2}[/math] on [math]X_{1}[/math]. Finally, [math]\widehat{\beta_{2}}[/math] is obtained from regressing the residuals [math]y^{*}[/math] on [math]X_{2}^{*}[/math].
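The equivalence between the full regression and the residual-on-residual regression can be checked on simulated data. The following is a minimal sketch in NumPy; the data-generating process, sample size, and coefficient values are hypothetical and serve only to produce correlated regressors.

```python
import numpy as np

rng = np.random.default_rng(1)
N = 50

# X1: a constant and one regressor; X2: two regressors correlated with X1.
X1 = np.column_stack([np.ones(N), rng.normal(size=N)])
X2 = rng.normal(size=(N, 2)) + 0.5 * X1[:, [1]]
y = X1 @ np.array([1.0, 2.0]) + X2 @ np.array([-1.0, 0.5]) + rng.normal(size=N)

# Full OLS on [X1 X2].
X = np.hstack([X1, X2])
beta_full = np.linalg.solve(X.T @ X, X.T @ y)

# Partitioned regression: residualize y and X2 on X1, then regress.
M1 = np.eye(N) - X1 @ np.linalg.inv(X1.T @ X1) @ X1.T
X2_star = M1 @ X2
y_star = M1 @ y
beta2_partitioned = np.linalg.solve(X2_star.T @ X2_star, X2_star.T @ y_star)

# The last two entries of the full estimate coincide with the partitioned one.
match = np.allclose(beta_full[2:], beta2_partitioned)
print(match)
```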

More than a clarification of how OLS operates, partitioned regression can be used to inform the variance of two-stage estimators in which estimation requires plugging in first stage estimates into a second stage where additional estimates are produced. It can also be used to inform variable selection problems.

Gauss-Markov Theorem

The Gauss-Markov theorem is an important result for the OLS estimator. It does not depend on asymptotics or normality assumptions. It states that, in the linear regression model (which does include the homoskedasticity assumption), [math]\widehat{\beta}_{OLS}[/math] is the best linear unbiased estimator (BLUE) of [math]\beta[/math], i.e., it has minimum variance among all linear unbiased estimators.

The proof is not hard. We consider the case where [math]X[/math] is fixed (i.e., all expressions are conditioned on [math]X[/math]; [math]\varepsilon[/math] is the random variable).

Proof

Let

[math]\begin{aligned} \widehat{\beta}_{OLS} & =\left(X^{'}X\right)^{-1}X^{'}y\\ \widetilde{\beta} & =Cy\end{aligned}[/math]

where [math]\widetilde{\beta}[/math] is some alternative linear estimator, defined by some matrix [math]C[/math] with dimensions [math]\left(K\times N\right)[/math].

For [math]\widetilde{\beta}[/math] to be unbiased, we require that

[math]\begin{aligned} & E_{\beta}\left(\widetilde{\beta}\right)=\beta\\ \Leftrightarrow & E_{\beta}\left(Cy\right)=\beta\\ \Leftrightarrow & E_{\beta}\left(C\left(X\beta+\varepsilon\right)\right)=\beta\\ \Leftrightarrow & CX\beta=\beta\end{aligned}[/math]

because [math]E_{\beta}\left(\varepsilon\right)=0[/math]. Notice that for the equation above to hold for every value of [math]\beta[/math], we require [math]CX=I[/math].

Let us now calculate the variance of [math]\widetilde{\beta}[/math]:

[math]Var_{\beta}\left(\widetilde{\beta}\right)=Var_{\beta}\left(C\varepsilon\right)=CC^{'}\sigma^{2}[/math]

Now, define [math]D[/math] as the difference between the “slopes” of [math]\widetilde{\beta}[/math] and [math]\widehat{\beta}_{OLS}[/math], s.t. [math]D=C-\left(X^{'}X\right)^{-1}X^{'}[/math]. Using this definition, we can rewrite [math]Var_{\beta}\left(\widetilde{\beta}\right)[/math] as

[math]\begin{aligned} Var_{\beta}\left(\widetilde{\beta}\right) & =CC^{'}\sigma^{2}\\ & =\left(D+\left(X^{'}X\right)^{-1}X^{'}\right)\left(D+\left(X^{'}X\right)^{-1}X^{'}\right)^{'}\sigma^{2}\\ & =DD^{'}\sigma^{2}+\underset{=0}{\underbrace{DX\left(X^{'}X\right)^{-1}\sigma^{2}+\left(X^{'}X\right)^{-1}X^{'}D^{'}\sigma^{2}}}+\underset{Var\left(\widehat{\beta}_{OLS}\right)}{\underbrace{\left(X^{'}X\right)^{-1}\sigma^{2}}}\end{aligned}[/math]

The last term equals the variance of the OLS estimator. The second and third terms equal zero each, because

[math]\left.\begin{array}{c} CX=I\\ CX=DX+I \end{array}\right\} \Rightarrow DX=0[/math]

where the first equation is an implication of unbiasedness, and the second one follows from postmultiplying the definition of [math]D[/math] by [math]X[/math].

Hence, we have learned that

[math]Var_{\beta}\left(\widetilde{\beta}\right)=Var\left(\widehat{\beta}_{OLS}\right)+DD^{'}\sigma^{2}.[/math]

Because [math]DD^{'}[/math] is a positive semidefinite matrix, [math]Var_{\beta}\left(\widetilde{\beta}\right)\geq Var\left(\widehat{\beta}_{OLS}\right)[/math] in the matrix sense, i.e., the difference between the two variance matrices is positive semidefinite.
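The argument can be illustrated numerically by constructing an alternative linear unbiased estimator and comparing variance matrices (up to the common factor [math]\sigma^{2}[/math]). In the sketch below, the construction [math]D=G\left(I-P_{X}\right)[/math] with a random matrix `G` is just one hypothetical way of obtaining a [math]D[/math] that satisfies [math]DX=0[/math]; the dimensions and seed are arbitrary.

```python
import numpy as np

rng = np.random.default_rng(2)
N, K = 20, 3
X = rng.normal(size=(N, K))

# OLS weights: beta_hat = A y, with A of dimension K x N.
A = np.linalg.inv(X.T @ X) @ X.T

# Residual-maker matrix I - P_X, which satisfies (I - P_X) X = 0.
M = np.eye(N) - X @ A

# Hypothetical alternative estimator: C = A + D with D X = 0.
G = rng.normal(size=(K, N))
D = G @ M
C = A + D

# Unbiasedness condition C X = I.
unbiased = np.allclose(C @ X, np.eye(K))

# Variance difference divided by sigma^2: C C' - (X'X)^{-1}, where A A' = (X'X)^{-1}.
excess = C @ C.T - A @ A.T
equals_DDt = np.allclose(excess, D @ D.T)

# Positive semidefiniteness: all eigenvalues are (numerically) non-negative.
eigs = np.linalg.eigvalsh((excess + excess.T) / 2)
is_psd = bool(np.all(eigs >= -1e-10))

print(unbiased, equals_DDt, is_psd)
```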

Finally, note that we did not make assumptions about the distribution of [math]\varepsilon[/math]. When [math]\varepsilon\sim N\left(0,\sigma^{2}\right)[/math], then [math]\widehat{\beta}_{OLS}[/math] attains the Cramér-Rao lower bound: In this case, [math]\widehat{\beta}_{OLS}[/math] is the best unbiased estimator (BUE), i.e., even non-linear unbiased estimators cannot be more efficient than OLS.